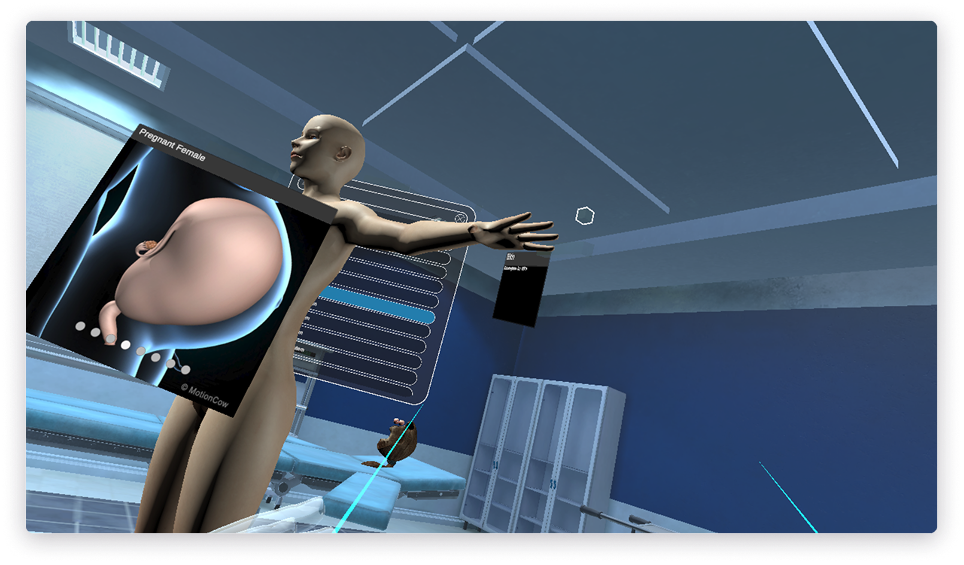

The primary challenge was to create a highly interactive, accurate anatomical model that users could manipulate through both hand gestures and UI controls while maintaining smooth performance on mobile XR devices. The application also needed to support multi-user sessions for real-time collaboration and scenario management, which required significant optimization.

The client approached us to expand their existing AR tablet app into a fully immersive VR/MR application with improved interaction, networked collaboration, and scenario-based learning capabilities, making it a complete educational solution.

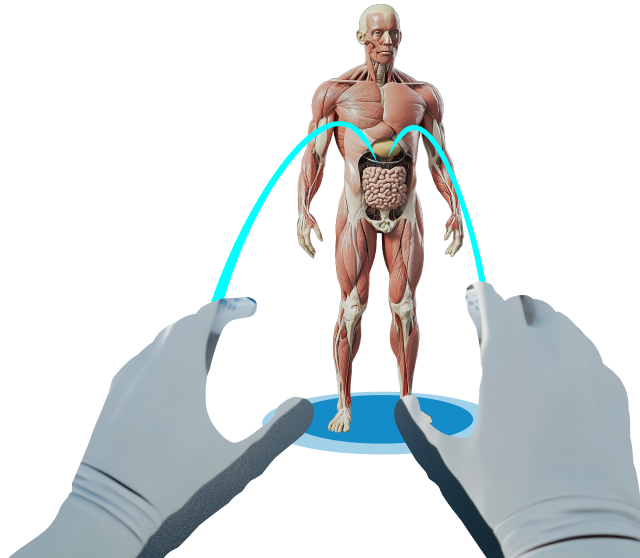

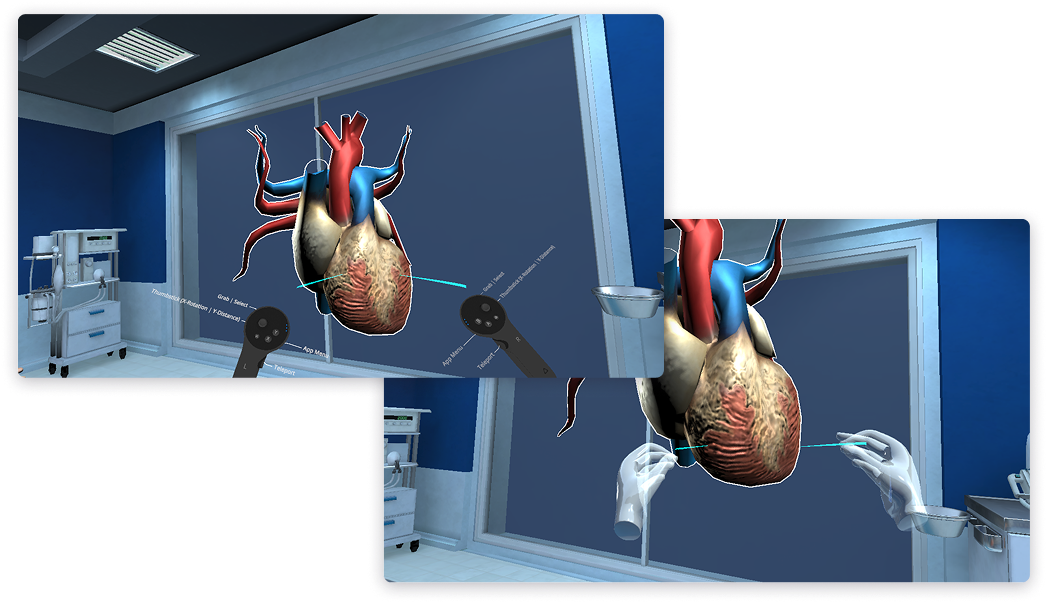

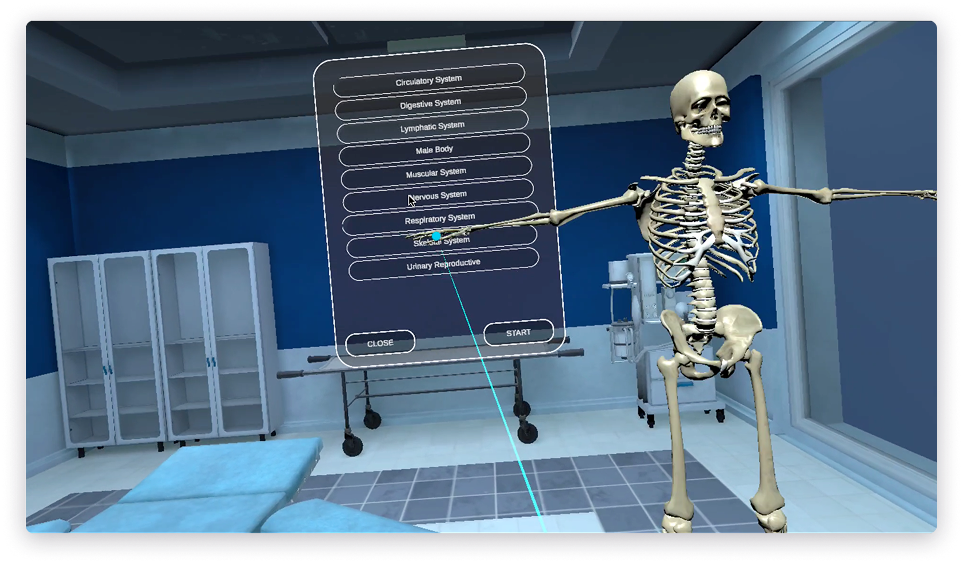

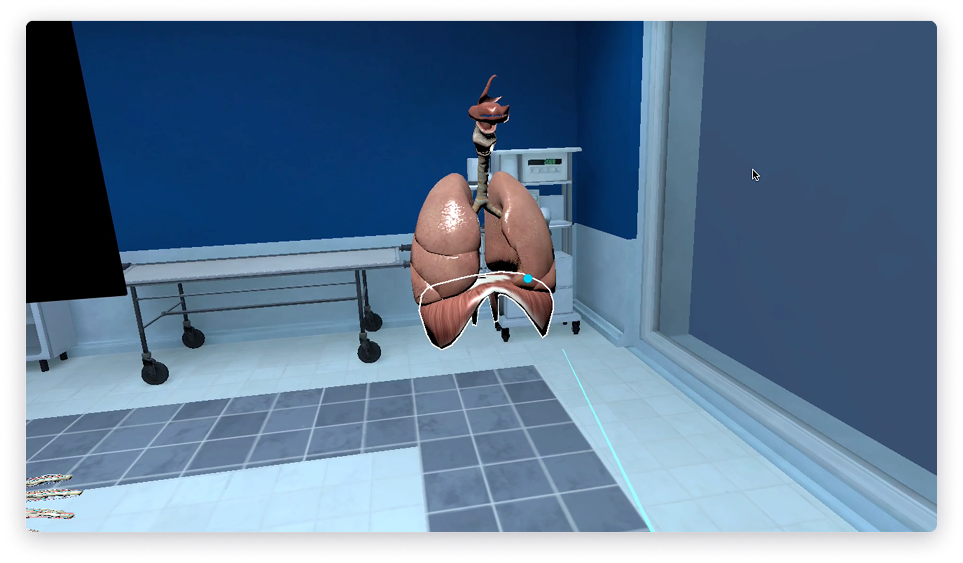

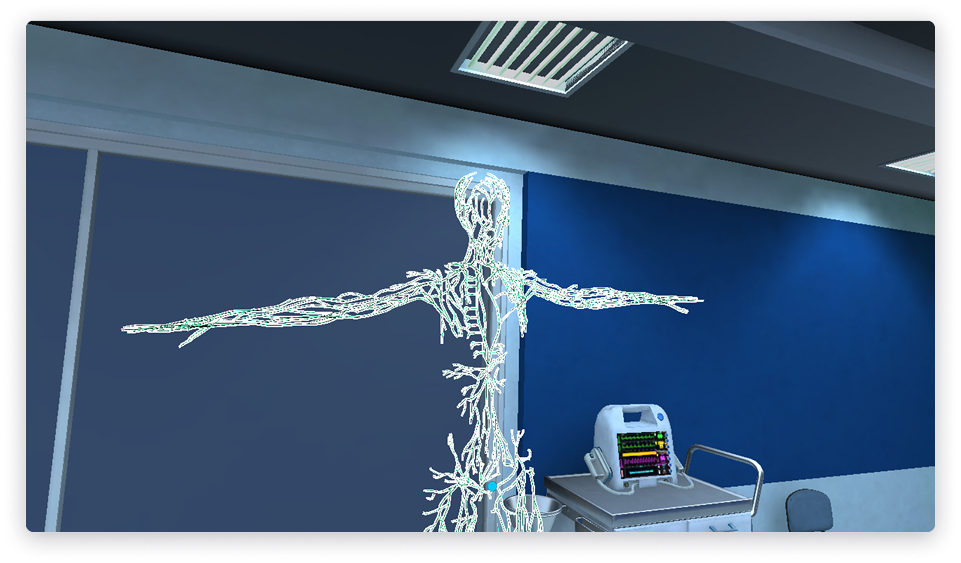

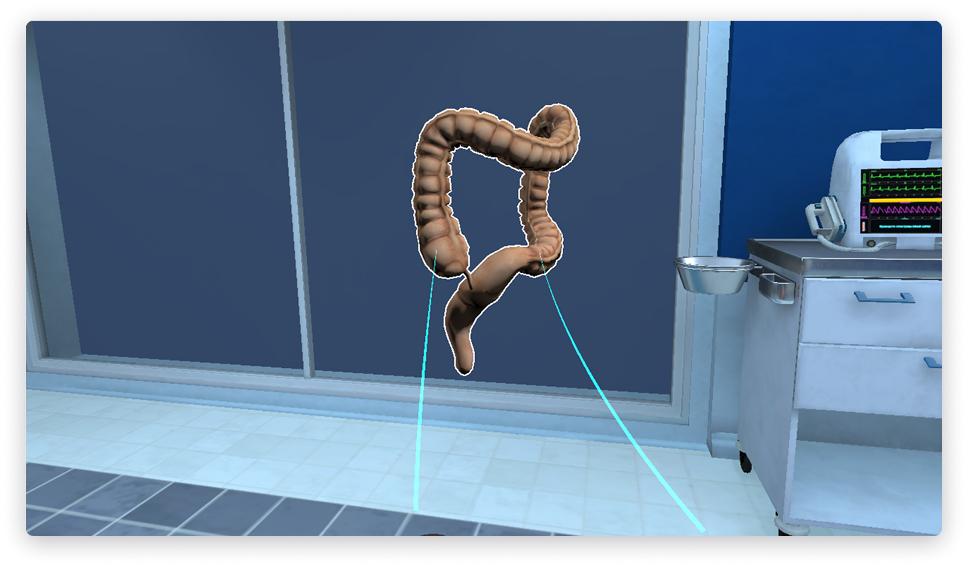

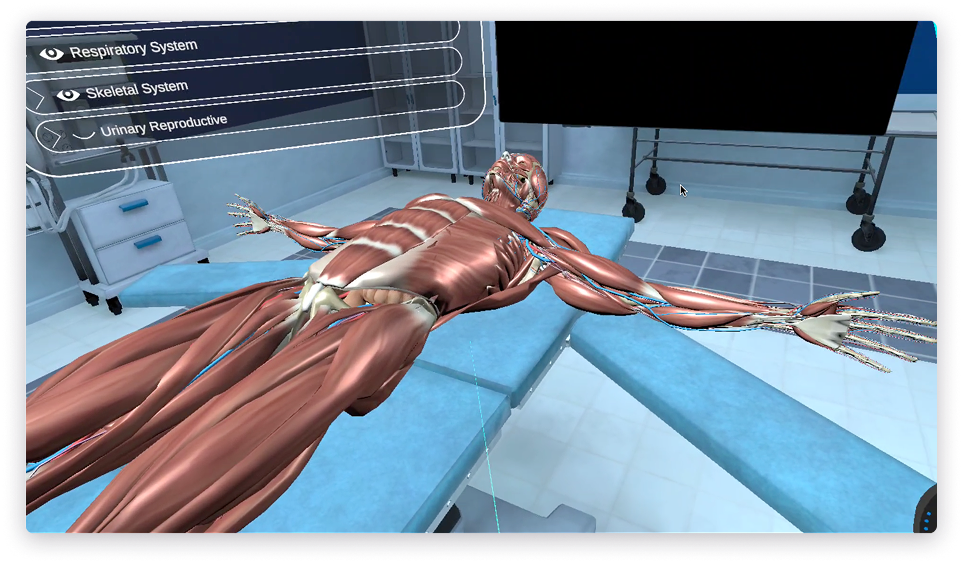

We began by integrating a detailed human anatomy model, building an intuitive UI for manipulating 990 distinct body parts, and implementing a gesture-based interaction system. We optimized the graphics using the Universal Render Pipeline (URP) to ensure the application ran smoothly on mobile hardware. Photon Fusion enabled real-time collaboration for up to 20 participants, allowing shared interactions within the virtual space. This phase also included a local licensing system for access control and updates.

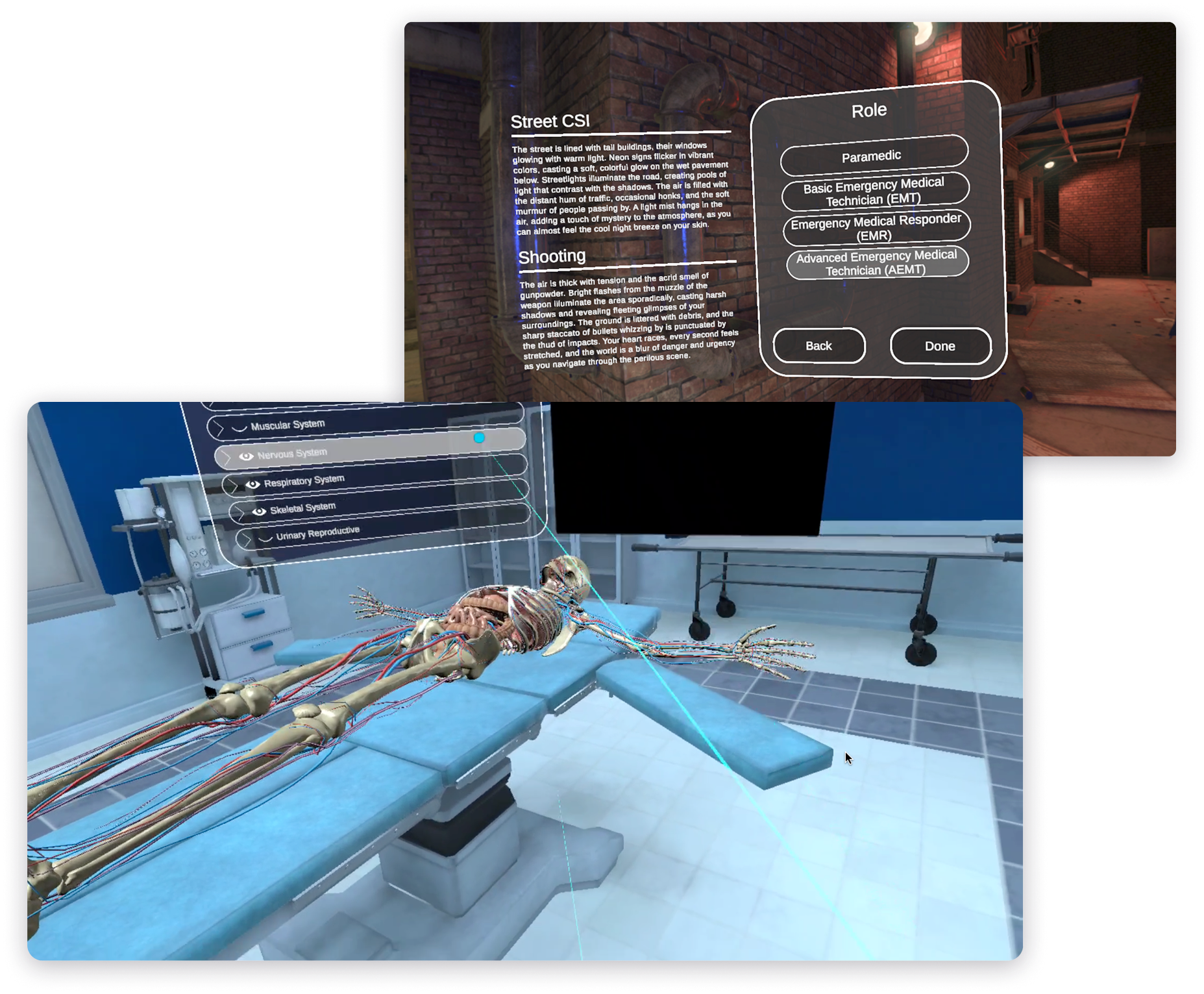

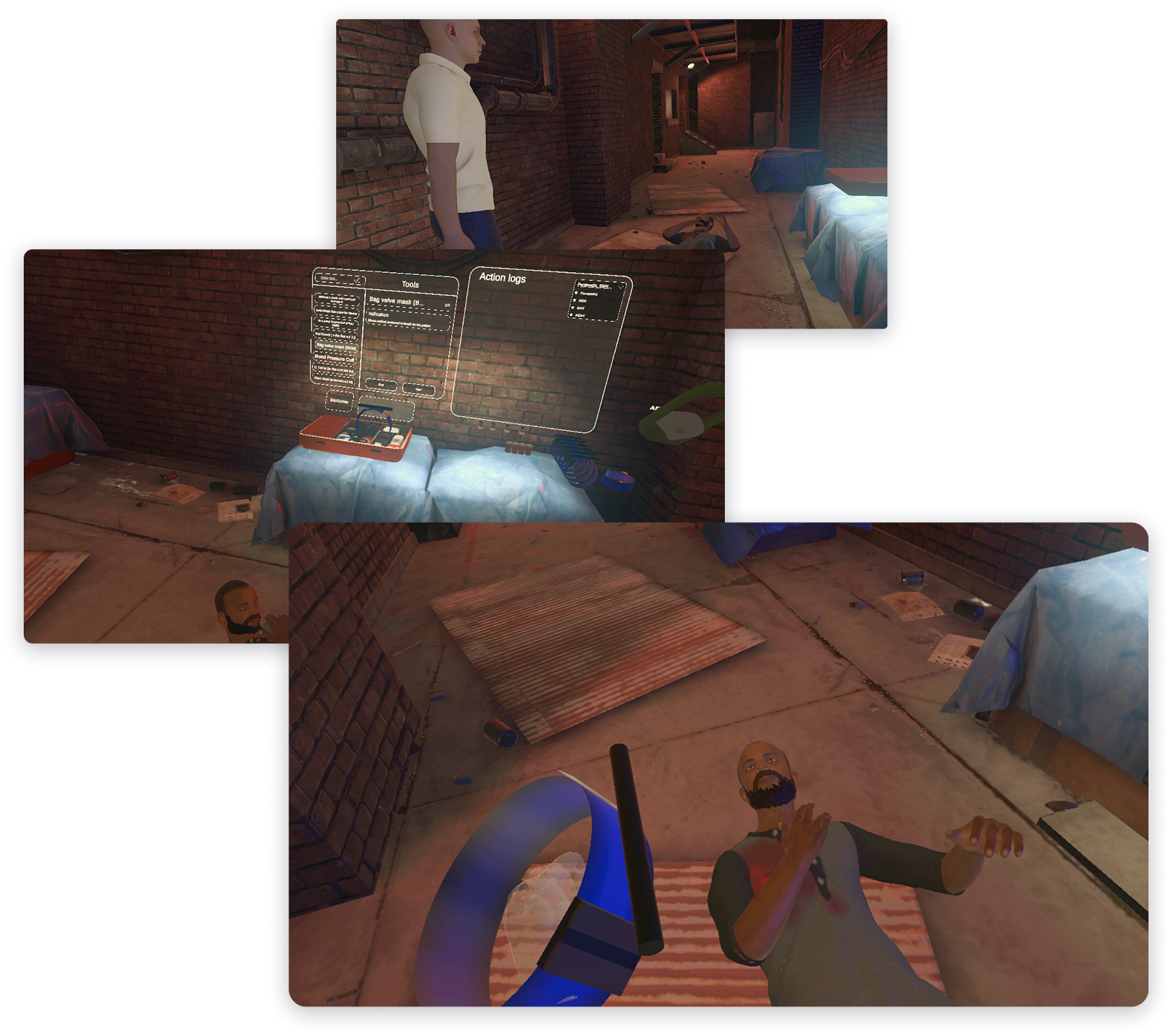

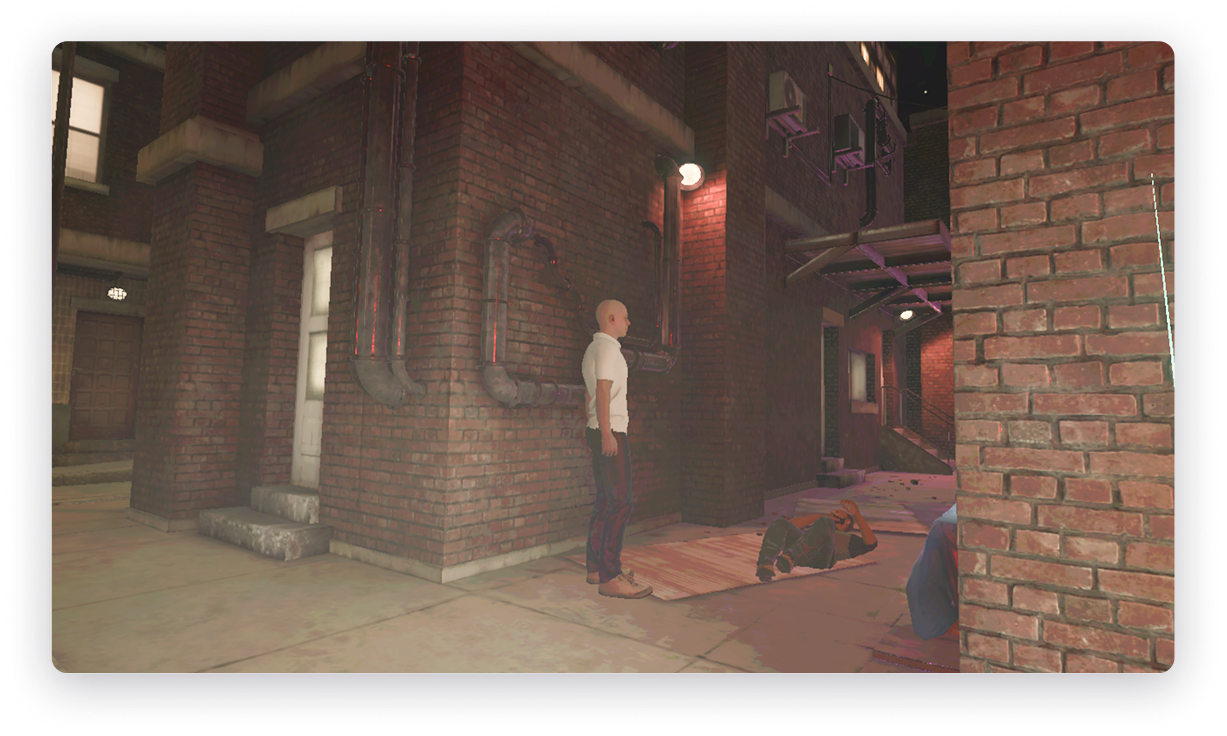

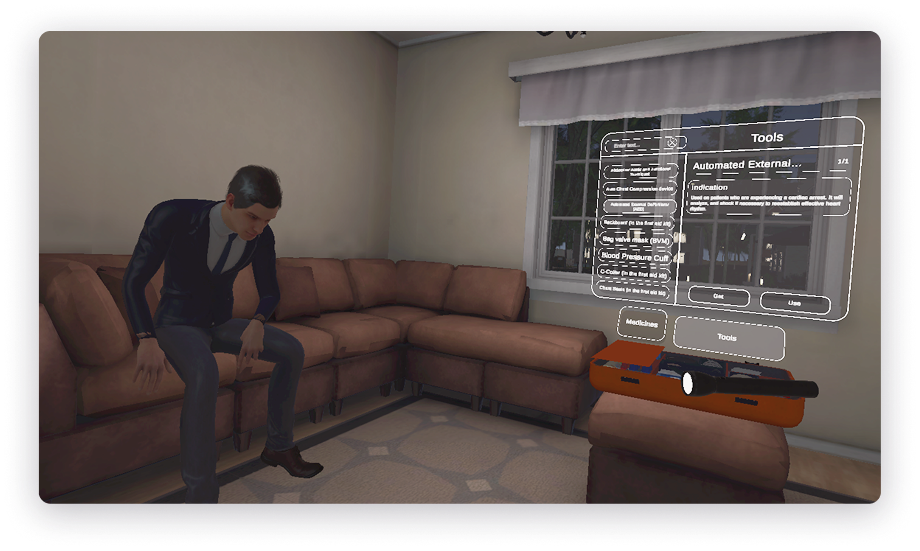

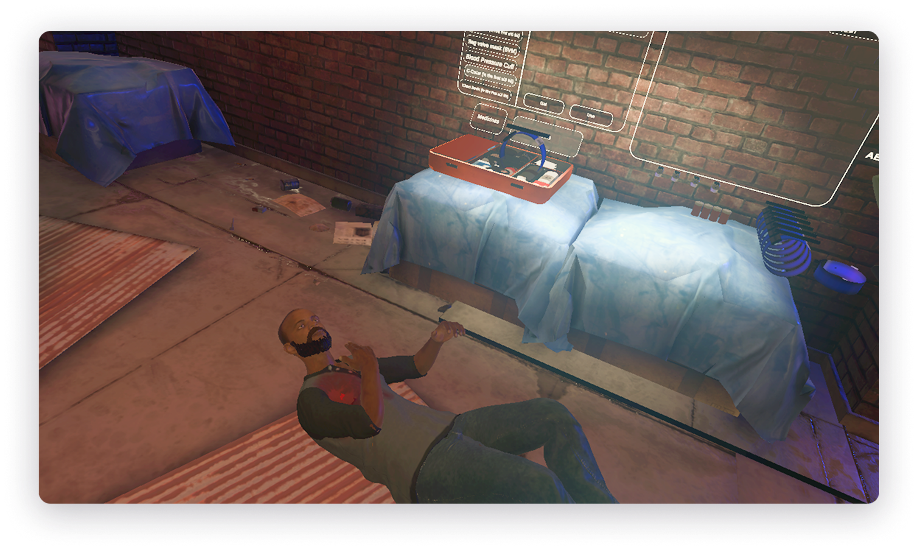

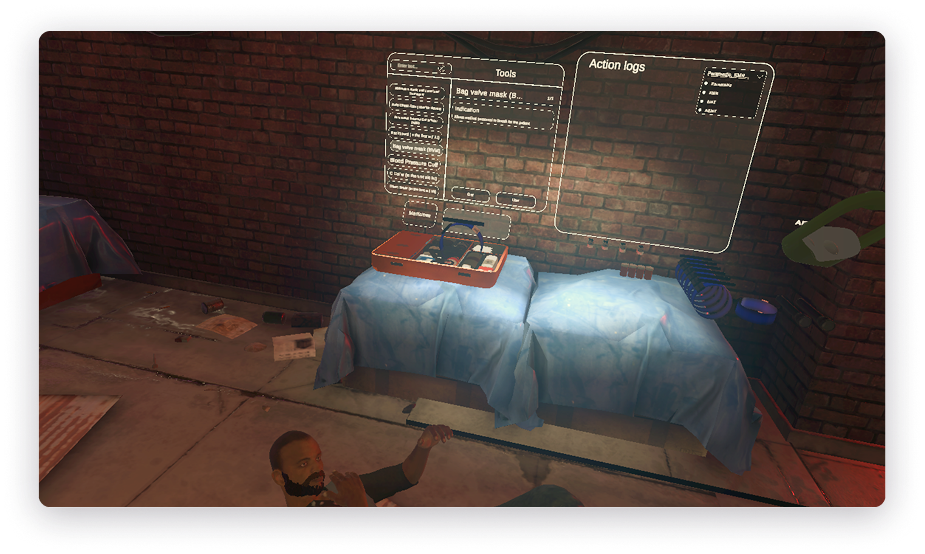

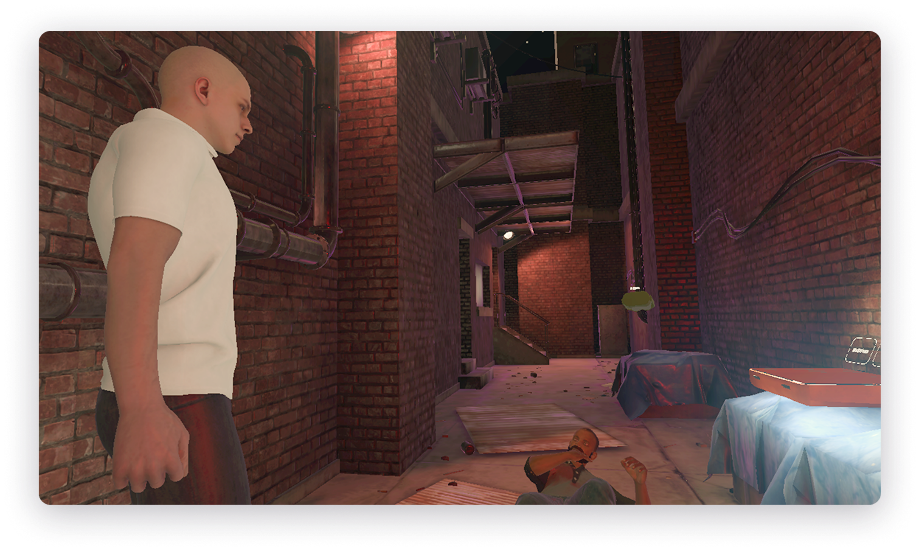

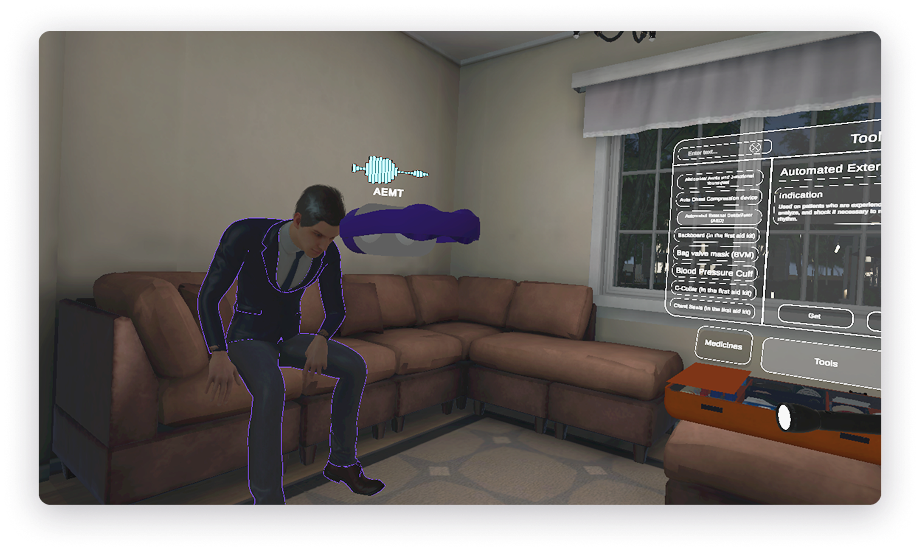

We then developed multiple training scenarios, each tailored to a different emergency response role. Using the OpenAI API, we created AI-driven NPCs that users could interact with through natural language, simulating real-life conversations and responses. This enhanced immersion and learning, letting users practice communication and decision-making in a realistic setting.
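As a rough illustration of how a role-specific NPC conversation could be assembled for a chat-style language model API, here is a minimal sketch. It is not the project's code: the class `NpcConversation`, its fields, the `max_messages` cap, and the model name passed in are all hypothetical, and Python is used purely for illustration. The only thing taken from the API itself is the general shape of a chat request, a list of messages that each carry a `role` and a `content`. The sketch builds the request body and makes no network call.

```python
from dataclasses import dataclass, field


@dataclass
class NpcConversation:
    """Running dialogue for one scenario NPC (hypothetical helper, not from the project)."""

    role_description: str          # e.g. "a bystander at a road accident"
    max_messages: int = 20         # assumed cap on history sent with each request
    history: list = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.max_messages <= 0:
            raise ValueError("max_messages must be positive")

    def add_user_utterance(self, text: str) -> None:
        # What the trainee said to the NPC.
        self.history.append({"role": "user", "content": text})

    def add_npc_reply(self, text: str) -> None:
        # What the model answered on the NPC's behalf.
        self.history.append({"role": "assistant", "content": text})

    def build_request(self, model: str) -> dict:
        # The system message pins the NPC to its scenario role.
        system = {
            "role": "system",
            "content": f"You are {self.role_description}. "
                       "Stay in character and answer briefly.",
        }
        # Send only the most recent messages to keep the request small.
        recent = self.history[-self.max_messages:]
        return {"model": model, "messages": [system, *recent]}


conv = NpcConversation("a bystander at a road accident", max_messages=2)
conv.add_user_utterance("What happened?")
conv.add_npc_reply("A car hit the barrier.")
conv.add_user_utterance("Is anyone hurt?")
request_body = conv.build_request("example-model")  # placeholder model name
```

Keeping the role in a system message and trimming older turns is one common way to hold an NPC in character across a long exchange; the actual prompts and limits used in the application are not described in this case study.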

Networking and Performance Optimization

We focused heavily on network stability and real-time synchronization to support multiple users and AI scenarios without performance drops. Photon Fusion enabled smooth interactions and reliable voice communication, ensuring a seamless multi-user experience.

UI/UX Design and Testing

Our team took a user-centric approach to designing the interface, focusing on ease of navigation and functionality. We conducted several iterations with the client’s feedback to refine the UI and interaction models, ensuring that the end product was intuitive and aligned with the educational goals of the platform.

4 experts

6 months