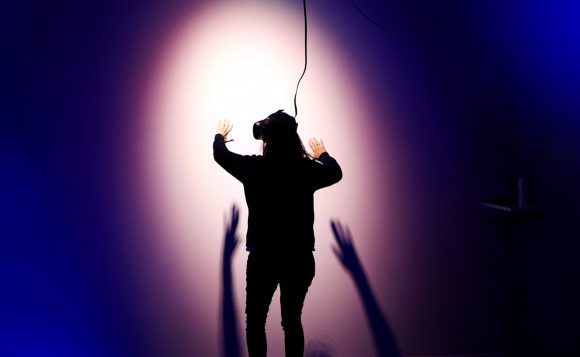

Today, we’re in the very early stages of virtual reality development. Nevertheless, VR is already applied in many different fields, including journalism.

Many popular media outlets, including The Guardian, The New York Times, and The Huffington Post, have started using VR and 360° video as a new storytelling format that helps audiences immerse themselves in a digital version of a specific event.

Evolution of VR Journalism

VR journalism is an innovative way to tell a story in digital reality, where a real event or problem is recreated. In general, modern VR journalism falls into two main categories: 360° videos and VR movies.

Pioneer of VR Journalism

Nonny de la Peña is considered “the godmother of modern virtual reality.” In 2007, the former New York Times journalist founded Emblematic Group, a company that produces VR content with clear linear storylines.

Hunger in Los Angeles, which premiered at the Sundance Film Festival in 2012, is considered the very first VR documentary. Festival visitors could watch it on a VR headset prototype designed by Palmer Luckey, the future founder of Oculus VR. Audiences were immersed in a virtual Los Angeles, where they saw a man in diabetic shock lose consciousness while standing in line.

“People broke down in tears as they handed back the goggles,” said de la Peña. “That’s when I knew this tool could let viewers experience and understand an event in a completely new way.”

After the movie’s success, Emblematic Group released a number of acclaimed VR documentaries. One of them is Greenland Melting, released in 2017. In it, the headset user boards a survey ship, observes the melting of Greenland’s ice, and gauges its scale. Greenland Melting was also one of the first VR movies shown at the Venice Film Festival, in the same year.

360° Video Journalism in Mass Media

In the second half of the 2010s, The New York Times and The Huffington Post were among the first outlets to complement their articles with 360° videos. These videos can broaden the audience’s perception of an event, as in the 360° video series Out of Sight by The Huffington Post. The three-part miniseries tells the story of people in Congo and Nigeria who suffer from three neglected tropical diseases: elephantiasis, river blindness, and sleeping sickness.

360° videos can also recreate historically important places as they looked many years ago. In the short documentary Remembering Emmett Till by The New York Times, old photos of the locations were combined with modern footage using this technology. Its main purpose is to show the place where Emmett Till, a 14-year-old Black boy, was brutally murdered more than 60 years ago. The teenager had been falsely accused of offending a white woman.

The Main Advantages of Using VR in Journalism

One of the main advantages of VR journalism is that it lets viewers plunge into a true story and gain a better understanding of the subject. Sir David Attenborough’s most recent VR documentary, First Life, is available to watch on Oculus TV. The 11-minute VR short tells the story of the beginning of life on Earth and the very first creatures that appeared in the ocean. The original 2D movie was released in 2010; with the help of virtual reality, headset users can now step into a digital version of the prehistoric period.

With the help of VR, a headset user becomes an active participant in the story rather than a passive viewer. Anthony Geffen, movie producer and founder of Atlantic Productions, described his experience of creating Sir David Attenborough’s VR movies in his TED Talk.

“We wanted to take you guys on a completely submerged journey, with David as your guide. The beauty of – when you’re building these kinds of stories, and we have these kinds of situations – is that you can see how the camera can move around. And we realized we were going to have to do this ‘on a rails’ experience. On a rails means we’re pushing you through the experience, but you’re able to look where you want to,” said Geffen.

Virtual reality can also enhance viewers’ empathy and improve their understanding of a problem. The Oscar-winning documentary Colette by The Guardian was converted into VR and made available to Oculus headset users. Thanks to this format, viewers can immerse themselves in the story of Colette Marin-Catherine, who fought the Nazis in France during World War II.

What You Should Pay Attention to When Creating a VR Story

VR is a relatively new storytelling tool, and it has not been fully researched, as professional journalists only started working with it in the first half of the 2010s. Therefore, you should be aware of the possible risks of using VR in journalism:

- There is no definite ethical code regulating the display of material that may cause witness trauma in people with unstable mental health. In North America alone, approximately 30% of individuals who have witnessed traumatic events develop PTSD.

- Virtual reality could serve as a weapon for spreading misinformation and fake news in information wars, much like deepfake technology. Russians have already used deepfakes in the war with Ukraine, making a fake video that showed Ukrainian president Volodymyr Zelensky surrendering.

Conclusion

Virtual reality is a new digital tool that many journalists use to tell stories thoroughly and convincingly. With the help of VR, you can engage viewers and evoke their empathy. At the same time, this tool can be used as a platform for spreading fake news and misinformation.